What Are We Building?

This blog series walks through the making of a fully automated voice assistant for real estate agents. It doesn’t just chat — it actually:

- Recommends homes based on user preferences

- Handles natural multi-turn conversations

- Schedules appointments into an agent’s live calendar

- Confirms details via SMS — all with no human in the loop

We built it using:

- VAPI for real-time voice input/output

- PydanticAI to orchestrate agent logic

- ChromaDB for semantic search over property listings

- n8n for Google Calendar integration and Twilio SMS

This post focuses on the agent setup and prompt engineering — the part that makes the voice assistant feel natural and goal-driven.

← Read Part 1: Building Real-Time Voice AI

🎥 Watch Part 2: Agent Architecture and Prompt Engineering

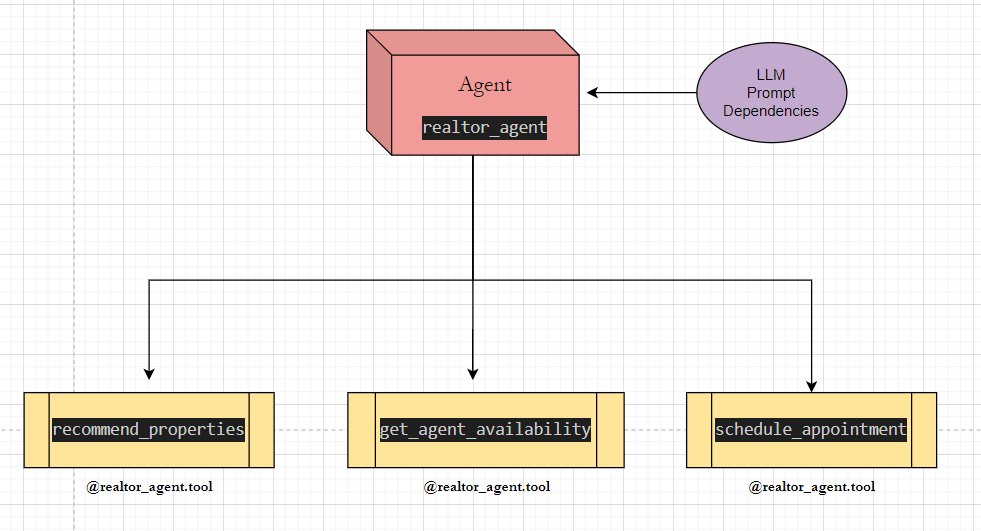

Why We Used a Single Agent Instead of a Multi-Agent Setup

Multi-agent systems are great for complex task decomposition, but we didn’t need that.

For voice interactions, speed and clarity are everything.

We chose a single PydanticAI agent that uses tools for:

- Property recommendation

- Availability lookup

- Appointment scheduling

Benefits:

- ✅ Lower latency — no back-and-forth between agents

- ✅ Easier to debug — everything routes through one decision point

- ✅ Prompt tuning becomes simpler — one personality to guide

This kept our mental model clean: one brain, three actions.
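The "one brain, three actions" idea can be sketched without any framework. The snippet below is a minimal illustration, not PydanticAI's API: the `TOOLS` registry, the `call_tool` helper, and the stub tool bodies are hypothetical. It only shows why a single dispatch point is easy to log and debug.

```python
import logging
from typing import Any, Callable

logger = logging.getLogger("realtor_agent")


# Hypothetical stubs standing in for the three real tools.
def recommend_properties(zip_code: str, max_price: int) -> dict[str, Any]:
    return {"address": "123 Elm St", "price": 420_000, "zip_code": zip_code}


def get_agent_availability(date: str) -> list[str]:
    return ["10:00", "14:30"]


def schedule_appointment(date: str, time: str, phone: str) -> dict[str, Any]:
    return {"status": "booked", "date": date, "time": time}


# One registry: the only place where tool names map to code.
TOOLS: dict[str, Callable[..., Any]] = {
    "recommend_properties": recommend_properties,
    "get_agent_availability": get_agent_availability,
    "schedule_appointment": schedule_appointment,
}


def call_tool(name: str, **kwargs: Any) -> Any:
    """Single decision point: every tool call is logged and dispatched here."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    logger.info("tool=%s args=%s", name, kwargs)
    return TOOLS[name](**kwargs)
```

With several agents, the same trace would be spread across multiple prompts and hand-offs; here one log line per call is enough to reconstruct what happened.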

Designing Clean, Stateless Tools

All logic is implemented as tools the agent can call. These tools live in their own Python modules.

Tools in this project:

- recommend_properties.py – semantic search via ChromaDB

- get_agent_availability.py – queries n8n for busy slots

- schedule_appointment.py – schedules into Google Calendar via webhook

Each tool has:

- A well-defined input and output (e.g. zip code, time window)

- Logging for traceability

- Validation using Pydantic models

- No hidden state, so the read-only tools are safe to test or retry (schedule_appointment is the exception, since it creates a calendar event)

By keeping tools stateless and focused, we can scale or replace them without changing the agent.
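As a rough sketch of what "stateless and focused" can look like, the example below turns a list of busy intervals into free slots. Everything in it is an assumption for illustration: the `AvailabilityRequest` and `free_slots` names, the 30-minute default, and the use of a stdlib dataclass as a stand-in for the Pydantic models mentioned above.

```python
import logging
from dataclasses import dataclass
from datetime import datetime, timedelta

logger = logging.getLogger("tools.availability")


@dataclass(frozen=True)
class AvailabilityRequest:
    """Validated input; a stdlib stand-in for a Pydantic model."""
    day_start: datetime
    day_end: datetime
    slot_minutes: int = 30

    def __post_init__(self) -> None:
        if self.day_end <= self.day_start:
            raise ValueError("day_end must be after day_start")
        if self.slot_minutes <= 0:
            raise ValueError("slot_minutes must be positive")


def free_slots(
    req: AvailabilityRequest,
    busy: list[tuple[datetime, datetime]],
) -> list[datetime]:
    """Return slot start times that overlap no busy interval.

    Pure function: the result depends only on the arguments.
    """
    step = timedelta(minutes=req.slot_minutes)
    slots: list[datetime] = []
    start = req.day_start
    while start + step <= req.day_end:
        end = start + step
        # Two intervals overlap when each one starts before the other ends.
        if not any(b_start < end and start < b_end for b_start, b_end in busy):
            slots.append(start)
        start = end
    logger.info("found %d free slots", len(slots))
    return slots
```

Because the function keeps nothing between calls, the same inputs always give the same slots, which makes it straightforward to unit test.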

Passing Configuration with AgentDependencies

Some tools need extra configuration — like database clients, webhook URLs, or scheduling rules.

To keep this clean, we pass those via the AgentDependencies object:

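```python
from dataclasses import dataclass

import chromadb

# AgentScheduleConfig is the project's own scheduling-rules type, defined elsewhere.


@dataclass
class AgentDependencies:
    chroma_client: chromadb.PersistentClient   # client for the listings store
    chroma_db_listings: str
    n8n_webhook_url: str                       # n8n webhook endpoint
    agent_schedule_config: AgentScheduleConfig  # scheduling rules
```

Each tool accesses what it needs from this shared context.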

- recommend_properties() uses chroma_client

- get_agent_availability() uses agent_schedule_config

- schedule_appointment() uses n8n_webhook_url

This makes the system easy to configure and reason about.
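One practical payoff of passing dependencies in is that a tool can be exercised against a fake client. The sketch below assumes a simplified signature: in PydanticAI a tool would typically receive its dependencies through the run context, while here the function takes `deps` directly to stay framework-free. `FakeChromaClient` and `FakeCollection` are hypothetical test doubles that mimic only the `get_collection` and `query` calls used, and treating `chroma_db_listings` as a collection name is also an assumption.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class AgentDependencies:
    chroma_client: Any          # a chromadb client in production, a fake in tests
    chroma_db_listings: str     # assumed here to be a collection name
    n8n_webhook_url: str
    agent_schedule_config: Any  # AgentScheduleConfig in the real project


def recommend_properties(
    deps: AgentDependencies, query: str, n_results: int = 3
) -> list[dict]:
    """Semantic search over listings; touches only the Chroma-related fields."""
    collection = deps.chroma_client.get_collection(deps.chroma_db_listings)
    result = collection.query(query_texts=[query], n_results=n_results)
    # Chroma returns one list of hits per query text; we sent a single query.
    return list(result["metadatas"][0])


# Hypothetical test doubles, mimicking the subset of the API used above.
class FakeCollection:
    def query(self, query_texts, n_results):
        hits = [{"address": "123 Elm St"}, {"address": "9 Oak Ave"}]
        return {"metadatas": [hits[:n_results]]}


class FakeChromaClient:
    def get_collection(self, name):
        return FakeCollection()


deps = AgentDependencies(
    chroma_client=FakeChromaClient(),
    chroma_db_listings="listings",
    n8n_webhook_url="https://example.invalid/webhook",
    agent_schedule_config=None,
)
print(recommend_properties(deps, "3 bedroom near downtown", n_results=1))
```

Swapping the fake for a real client changes only how `deps` is built, not the tool itself.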

Why We Chose GPT-4o Mini for This Agent

Voice agents demand sub-second responses. If the AI hesitates, it breaks the conversational flow.

We chose OpenAI’s GPT-4o-mini because it consistently delivers high-quality answers with minimal latency. During testing, it responded faster than the larger models we tried, and we saw no noticeable drop in output quality for this task.

We also briefly tested OpenRouter for model routing, but OpenAI’s native GPT-4o-mini gave us better performance for real-time voice.
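A comparison like this is easy to reproduce with a small timing harness. The sketch below is an assumption about how one might measure it, not the script we used: `measure_latency` is a hypothetical helper, and `fake_model_call` is a stand-in to replace with a real client call.

```python
import statistics
import time
from typing import Callable


def measure_latency(call: Callable[[], object], runs: int = 5) -> dict[str, float]:
    """Time `call` several times; return median and worst-case latency in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append(time.perf_counter() - start)
    return {"median_s": statistics.median(samples), "max_s": max(samples)}


# Stand-in for a model request; replace with a real client call when benchmarking.
def fake_model_call() -> str:
    time.sleep(0.01)
    return "ok"


stats = measure_latency(fake_model_call, runs=3)
print(f"median={stats['median_s']:.3f}s max={stats['max_s']:.3f}s")
```

For voice, the worst case matters as much as the median, since a single slow turn is what a caller notices.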

The System Prompt That Guides the Agent

The system prompt in realtor_agent.py defines how the assistant should behave.

It includes:

- 🎯 Objectives: recommend homes, schedule viewings

- 🤝 Tone: friendly, polite, efficient

- 🧠 Rules: ask clarifying questions, confirm phone numbers, don’t guess dates

- 🔄 Tool usage: only call a tool when you have the needed inputs

This prompt ensures the agent:

- Doesn’t jump ahead before getting zip code or budget

- Offers properties one at a time

- Validates user input (e.g., phone number format)

- Speaks naturally and concisely
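The prompt carries these rules, but a small code-side guard can back them up. The helpers below are a hedged sketch rather than code from the repo: `normalize_us_phone` assumes US numbers and returns them in E.164 form, and `missing_inputs` with its `REQUIRED_FOR_SEARCH` tuple is a hypothetical way to check that zip code and budget are present before a tool runs.

```python
import re
from typing import Optional

# Assumed minimum inputs before a property search is worth running.
REQUIRED_FOR_SEARCH = ("zip_code", "budget")


def normalize_us_phone(raw: str) -> Optional[str]:
    """Return the number as +1XXXXXXXXXX, or None if it is not a plausible US number."""
    digits = re.sub(r"\D", "", raw)
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop the country code
    if len(digits) != 10:
        return None
    return f"+1{digits}"


def missing_inputs(collected: dict, required=REQUIRED_FOR_SEARCH) -> list[str]:
    """List the required fields that are absent or empty."""
    return [name for name in required if not collected.get(name)]
```

If `normalize_us_phone` returns None, the tool can send that back as an error so the agent asks the caller to repeat the number instead of booking with bad data.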

Example: The Prompt That Drives Everything

The system prompt guides the agent’s behavior. Here’s a simplified version of what we use:

```python
SYSTEM_PROMPT = '''
You are a friendly and efficient real estate assistant.
Speak clearly, warmly, and to the point.

Your tasks:
1. Collect buyer preferences (budget, bedrooms, location, etc.)
2. Recommend properties one at a time using provided tools
3. Offer to schedule a showing after each recommendation

Always confirm missing information politely.
Never guess dates; let tools handle scheduling.
'''
```

This structured prompt enables the LLM to behave naturally, with no need for complex branching logic in the code.


Tool Output Wrapping and Response Shaping

When a tool returns structured data, the agent must turn that into a friendly spoken message.

This is handled directly in the prompt. The agent is told to:

- Speak in full sentences

- Highlight useful property features (e.g., “big yard”)

- List available times clearly

- Confirm bookings with a human tone

Example transformation:

Tool Output:

```json
{ "address": "123 Elm St", "price": "$420,000", "summary": "Cozy 3BR near downtown." }
```

Agent response:

“Here’s one that might work: a cozy 3-bedroom at 123 Elm St, listed at $420,000. It’s close to downtown.”

This makes it feel like a helpful agent, not a JSON reader.

What This Enables

This design allows for:

- 🧩 Modular development (tools, config, prompt are all isolated)

- 🧪 Easy testing with local scripts (e.g., chat.py)

- 🚀 Natural user experience over real-time voice

It also makes future features easier:

- Outbound calls using the same agent and tools

- Preference memory with no code change

- SMS or web chat interface reuse

Watch It in Action

🎥 Watch Part 2: Agent Architecture and Prompt Engineering

💻 View the code on GitHub

📞 Want something like this? Schedule a call

Quick Note on Testing

Before we ever connected this agent to voice, we tested it using a simple REPL chatbot (chat.py).

This script lives in the repo and lets us simulate conversations locally. It’s much faster than listening to audio — perfect for debugging prompts, tool logic, and ChromaDB results before going live.
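For readers who want the shape of such a loop, here is a minimal sketch. It is not the repo's chat.py: `run_repl` and `echo_agent` are hypothetical names, and the echo function is a placeholder for a real agent call.

```python
from typing import Callable, Iterable


def run_repl(
    respond: Callable[[str], str],
    lines: Iterable[str],
    write: Callable[[str], None] = print,
) -> None:
    """Feed each user line to `respond` and write the reply; stop on 'quit' or 'exit'."""
    for line in lines:
        text = line.strip()
        if text.lower() in {"quit", "exit"}:
            break
        if text:  # skip blank lines
            write(f"assistant: {respond(text)}")


def echo_agent(message: str) -> str:
    # Placeholder for a real agent call.
    return f"You said: {message}"


# Scripted run; pass sys.stdin as `lines` for an interactive session.
run_repl(echo_agent, ["I need a 3 bedroom near downtown", "quit"])
```

Taking the input lines and the writer as parameters means the same loop can replay a saved conversation, which is handy when a prompt change needs to be checked against earlier transcripts.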